We derive the gravitational equations of motion of general theories of gravity from thermodynamics applied to a local Rindler horizon through any point in spacetime.

To calculate entropy changes for a chemical reaction, recall that the energy given off (or absorbed) by a reaction, monitored by noting the change in temperature of the surroundings, can be used to determine the enthalpy of the reaction. A convenient practical model for accurately estimating the total entropy (Si) of atmospheric gases based on physical action has also been proposed.

To prove that this is the correct function for the entropy, we consider an encoding $E: A^r \rightarrow B^s$ that encodes blocks of $r$ letters of an alphabet $A$ with $n$ letters as $s$ characters of an alphabet $B$ with $m$ characters. Writing $L$ for the length of a message, a message $m_A$ and its encoding $m_B$ satisfy

$$L(m_A) = r, \quad L(m_B) = s, \quad m_B = E(m_A)$$

The size of the domain is $n^r$ and the size of the range is $m^s$. The range of $E$ must be at least as large as the domain; otherwise two different messages in the domain would have to map to the same encoding in the range. Hence

$$m^s \ge n^r \quad\Longleftrightarrow\quad \frac{s}{r} \ge \log_m(n),$$

so each letter of $A$ costs at least $\log_m(n)$ characters of $B$. In this case the entropy depends only on the sizes of $A$ and $B$, since $\log_m(n) = \ln n / \ln m$.
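The counting argument can be checked numerically. The following is a minimal Python sketch, assuming small integer alphabet sizes; `min_block_length` and `entropy` are hypothetical helper names, not taken from the source. It finds the smallest $s$ with $m^s \ge n^r$ using exact integer arithmetic and compares $s/r$ with $\log_m(n)$, which is also the Shannon entropy (in base $m$) of the uniform distribution over $A$.

```python
import math


def min_block_length(n: int, m: int, r: int) -> int:
    """Smallest s with m**s >= n**r: enough length-s strings over B
    to give every length-r block over A its own distinct code word."""
    s = 0
    while m ** s < n ** r:  # exact integer comparison, no rounding issues
        s += 1
    return s


def entropy(probs, base):
    """Shannon entropy -sum(p * log_base(p)), skipping zero probabilities."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


n, m = 3, 2  # |A| = 3 letters, |B| = 2 characters (binary)
for r in (1, 2, 5, 10, 100):
    s = min_block_length(n, m, r)
    print(r, s, s / r)  # characters per letter for block length r

print(math.log(n, m))                # log_2(3), about 1.585
print(entropy([1 / n] * n, base=m))  # same value for the uniform distribution
```

With $n = 3$ and $m = 2$, the ratio $s/r$ never drops below $\log_2 3$, and longer blocks let it approach that bound.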

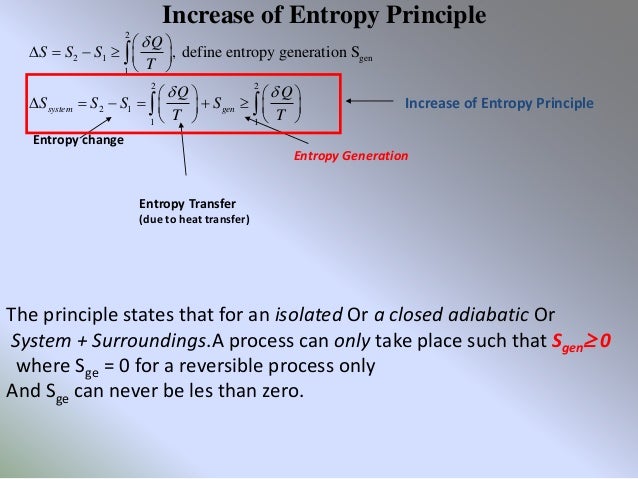

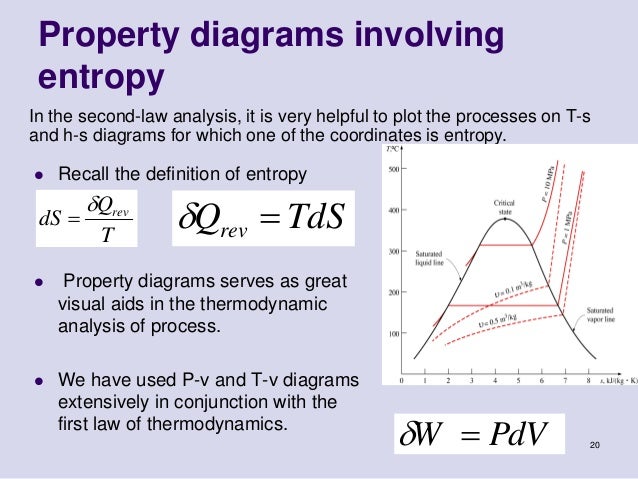

How surprising is an event? Informally, the lower the probability you would have assigned to an event, the more surprising it is, so surprise seems to be some kind of decreasing function of probability. It is also reasonable to ask that it be continuous in the probability. And if event $A$ has a certain amount of surprise, event $B$ has a certain amount of surprise, and you observe them together, and they are independent, it is reasonable that the amounts of surprise add. From here it follows that the surprise you feel at event $A$ happening must be a positive constant multiple of $-\log \mathbb{P}(A)$.

Entropy is a scientific concept, as well as a measurable physical property, that is most commonly associated with a state of disorder, randomness, or uncertainty. Given that entropy is a measure of the dispersal of energy in a system, the more chaotic a system is, the greater the dispersal of energy will be, and thus the greater the entropy will be. The term and the concept are used in diverse fields, from classical thermodynamics, where it was first recognized, to the microscopic description of nature in statistical physics, and to the principles of information theory. Ludwig Boltzmann (1844–1906) (O'Connor & Robertson, 1998) understood this concept well and used it to derive a statistical approach to calculating entropy. The entropy formula has a much deeper meaning than was initially believed, as it is completely independent of thermodynamics. Consequently, entropy can serve as a starting point for further reasoning, and the probability distribution maximising entropy can be used for statistical inference [10, 11].

Partial Differential Equations, Lecture 3: Burgers' Equation: Shocks and Entropy Solutions

A conservation law is a first-order PDE of the form $u_t + F(u)_x = 0$. We think of $x$ as a spatial variable and $t$ as time. The function $u$ is interpreted as the density of a (one-dimensional) fluid, and $F$ describes the flux, the rate at which the fluid flows, as a function of $u$. Burgers' equation is the case $F(u) = u^2/2$.

According to the drive towards higher entropy, the formation of water from hydrogen and oxygen is an unfavorable reaction. In this case, however, the reaction is highly exothermic, and the drive towards a decrease in energy allows the reaction to occur.

The specific entropy ($s$) of a substance is its entropy per unit mass: it equals the total entropy ($S$) divided by the total mass ($m$). Depending on the type of process we encounter, we can now determine the change in specific entropy for a gas. For an ideal gas with constant specific heats, going from state 1 to state 2,

$$s_2 - s_1 = c_v \ln\frac{T_2}{T_1} + R \ln\frac{v_2}{v_1} = c_p \ln\frac{T_2}{T_1} - R \ln\frac{p_2}{p_1}$$

where $T$ is temperature, $v$ is specific volume, $p$ is pressure, and $R$ is the specific gas constant. These equations can be a bit confusing, because we use the specific heat at constant volume when we have a process that changes volume, and the specific heat at constant pressure when the process changes pressure.
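Because entropy is a state function, the $c_v$/volume form and the $c_p$/pressure form of the ideal-gas specific-entropy change must give the same value for the same pair of states. The sketch below checks this numerically. It assumes an ideal gas with constant specific heats and uses approximate dry-air values ($R \approx 287$, $c_v \approx 718$ J/(kg·K)) purely for illustration. The function names are hypothetical.

```python
import math


def delta_s_volume_form(cv, R, T1, T2, v1, v2):
    """Specific entropy change of an ideal gas from c_v, T and specific volume."""
    return cv * math.log(T2 / T1) + R * math.log(v2 / v1)


def delta_s_pressure_form(cp, R, T1, T2, p1, p2):
    """Specific entropy change of an ideal gas from c_p, T and pressure."""
    return cp * math.log(T2 / T1) - R * math.log(p2 / p1)


# Assumed, approximate properties of dry air in J/(kg*K), constant specific heats.
R = 287.0
cv = 718.0
cp = cv + R  # Mayer's relation c_p - c_v = R for an ideal gas

# Two arbitrary states in SI units; specific volume from the ideal-gas law v = R*T/p.
T1, p1 = 300.0, 100_000.0
T2, p2 = 450.0, 300_000.0
v1, v2 = R * T1 / p1, R * T2 / p2

ds_v = delta_s_volume_form(cv, R, T1, T2, v1, v2)
ds_p = delta_s_pressure_form(cp, R, T1, T2, p1, p2)
print(f"{ds_v:.3f} J/(kg*K) vs {ds_p:.3f} J/(kg*K)")  # the two forms agree
```

Which form to use is a matter of which state variables are known; the process path does not matter once the end states are fixed.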

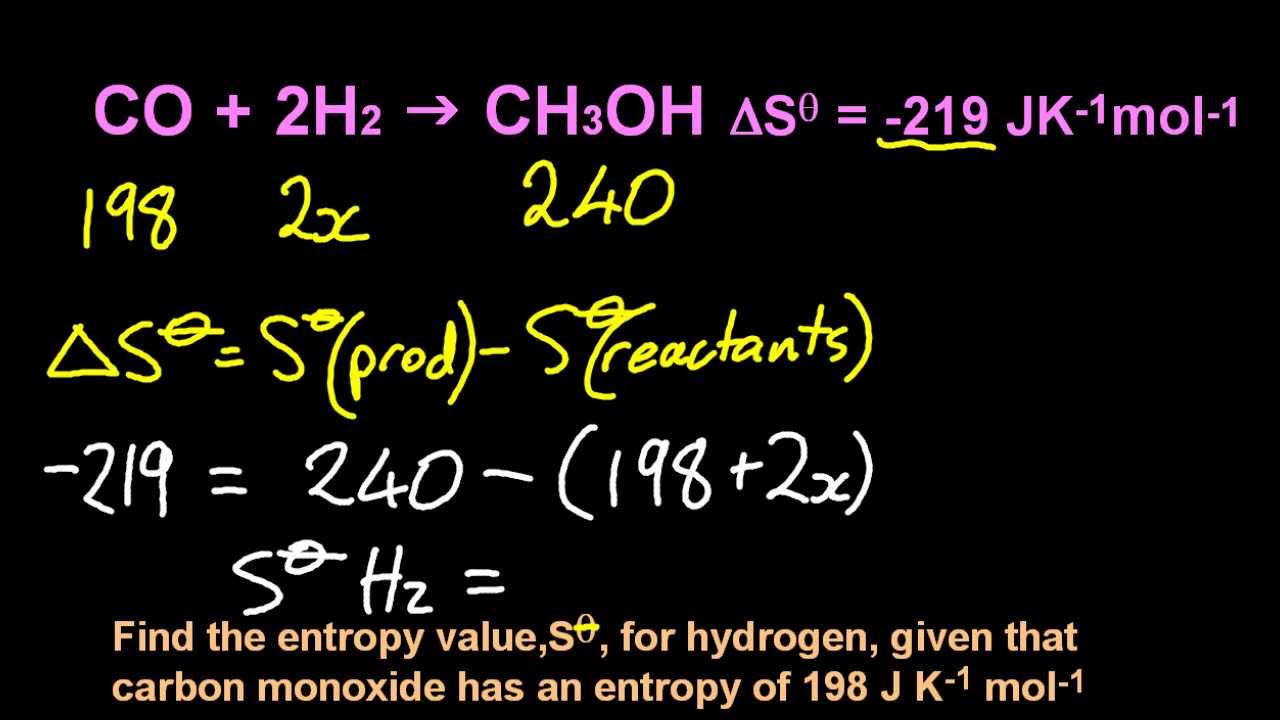

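The entropy change for the formation of water is highly negative because three gaseous molecules (two of $\mathrm{H_2}$ and one of $\mathrm{O_2}$) are converted into two liquid molecules of $\mathrm{H_2O}$. Boltzmann proposed the following equation to describe the relationship between entropy and the amount of disorder in a system:

$$S = k_B \ln W$$

where $k_B$ is the Boltzmann constant and $W$ is the number of microstates consistent with the macrostate of the system.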
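Boltzmann's formula can be illustrated with a toy model. The following is a minimal sketch, assuming a system of $N$ independent two-state particles (like coins), where the macrostate "$k$ particles up" has $W = \binom{N}{k}$ microstates; `boltzmann_entropy` is a hypothetical helper name. The most mixed macrostate has the most microstates and therefore the highest entropy. Because microstate counts of independent systems multiply, their entropies add, mirroring the additivity of surprise for independent events.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(num_microstates: int) -> float:
    """S = k_B * ln(W) for a macrostate with W microstates."""
    return K_B * math.log(num_microstates)


# Toy model (an assumption for illustration): N two-state particles, k of them "up".
N = 100
for k in (0, 10, 50):
    W = math.comb(N, k)  # number of microstates for this macrostate
    print(k, W, boltzmann_entropy(W))

# Independent subsystems: W multiplies, so S adds.
W1, W2 = 6, 4
print(boltzmann_entropy(W1 * W2), boltzmann_entropy(W1) + boltzmann_entropy(W2))
```

The perfectly ordered macrostate ($k = 0$) has a single microstate and zero entropy.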
